Imagine a world where your AI systems can think, decide, and adapt like a human team, tackling complex tasks without being chained to a single tech giant’s ecosystem. That’s the promise of agentic AI: systems that act autonomously, make decisions, and solve problems with minimal human input.

Picture an AI that analyzes sensitive data, processes claims, or assists in diagnostics autonomously. Sounds powerful, right? Well, there’s a catch. Many of these systems come tethered to traditional hyperscalers – giant cloud providers like AWS, Azure, or Google Cloud – that can lock you into their infrastructure. That lock-in means higher costs, less flexibility, and dependency on one provider’s roadmap.

In this post, we’ll explore how to harness the power of agentic AI while staying free from hyperscaler lock-in, using open-source frameworks like CrewAI and tools like LiteLLM to keep your options open in a fast-evolving AI landscape.

This is the coming Decade of Agentic AI

Agentic AI is transforming how businesses operate. Unlike traditional AI, which might analyze data or generate text, agentic AI is proactive and goal-driven. It can orchestrate complex workflows, like a digital project manager who doesn’t need coffee breaks. The downside is that many agentic frameworks, like Microsoft’s AutoGen, are designed to work best (or only) with specific AI models or cloud platforms.

AutoGen, for instance, ties closely to OpenAI’s APIs, limiting you to their models and pricing structure. If their costs spike or a better model emerges elsewhere, you’re stuck. This lock-in isn’t just inconvenient; it’s a strategic risk. Businesses can’t afford to be at the mercy of one provider when the AI market is moving so fast.

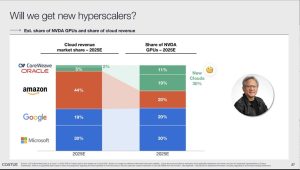

Hyperscalers & NeoCloud Providers

While traditional hyperscalers like AWS, Azure, and Google Cloud dominate Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) workloads, a growing cohort of neo-cloud providers such as CoreWeave, Lambda, G42, and Humain is emerging. They are building GPU-heavy clouds centered on open-source technologies, offering viable alternatives that reduce dependency and promote flexibility.

Chart Credit: Coatue

Competition amongst State-of-the-Art Models

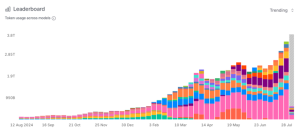

Platforms like OpenRouter are completely changing the game. OpenRouter acts as a middleman that routes AI tasks to various models, so you don’t need to hardcode a specific AI model provider.
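
To make the routing idea concrete, here is a minimal sketch, assuming OpenRouter’s OpenAI-compatible chat completions endpoint. The model IDs and the `OPENROUTER_API_KEY` variable name are illustrative assumptions. The only point is that the model is a string in the request body rather than something baked into your code.

```python
import json
import os
import urllib.request

# Assumption: OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request; the model is just a string."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Illustrative model IDs: swapping providers means changing one string.
api_key = os.environ.get("OPENROUTER_API_KEY", "sk-placeholder")
req_a = build_chat_request("anthropic/claude-3.5-sonnet", "Summarize this claim.", api_key)
req_b = build_chat_request("mistralai/mistral-large", "Summarize this claim.", api_key)
```

Nothing is sent here. Passing either request to `urllib.request.urlopen` with a real key would perform the call, and both requests hit the same URL with a different `model` value.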

OpenRouter’s data gives us a window into how fluid this space is. As of mid-2025, OpenRouter processes more than 100 trillion tokens annually and serves over 2.5 million developers. Sacra estimates that OpenRouter hit $5 million in annualized revenue in May 2025, up from $1 million at the end of 2024 – a whopping 400% growth spurt.

Source: https://openrouter.ai/rankings

Their rankings show model popularity shifting constantly – one snapshot has top models handling billions of tokens with usage spikes up to 31% in a month, as providers like Mistral and DeepSeek release updates that outpace competitors.

What does this tell us? It’s a highly competitive market. Model dominance is temporary. Llama was popular last year, and Claude and Gemini might be hot today, but tomorrow it could be Grok, OpenAI’s upcoming GPT-5, or a new entrant taking the lead as capabilities leapfrog. Betting your entire AI strategy on one model or hyperscaler is like picking a single stock and hoping it never tanks. Good luck with that strategy!

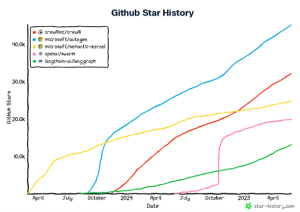

Open-source agentic frameworks: CrewAI

So, how do you stay flexible? Enter open-source agentic frameworks. One that’s definitely stealing the spotlight at the moment is CrewAI.

Unlike provider-specific tools, CrewAI is built to be model-agnostic, letting you plug in any LLM without being chained to a hyperscaler. CrewAI ranks among the top open-source agentic frameworks, alongside LangGraph and Microsoft AutoGen, praised for its simplicity in orchestrating role-playing AI agents.

CrewAI’s documentation emphasizes compatibility with multiple LLMs, including local models via tools like Ollama, making it a go-to for businesses wanting control over their AI stack.
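
As a rough sketch of what model-agnostic looks like in practice, the snippet below keeps the model choice in environment variables and builds a one-agent crew from it. It assumes the `crewai` package is installed and, for the default, that an Ollama server is running locally. The variable names `AGENT_MODEL` and `AGENT_BASE_URL`, the default model string, and the agent’s role text are all made up for illustration.

```python
import os
from typing import Dict, Mapping, Optional


def llm_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, Optional[str]]:
    """Read the model choice from the environment (hypothetical variable names)."""
    env = os.environ if env is None else env
    return {
        # Default assumes a Llama 3 model served by a local Ollama instance.
        "model": env.get("AGENT_MODEL", "ollama/llama3"),
        # None lets the underlying client use the provider's default endpoint.
        "base_url": env.get("AGENT_BASE_URL"),
    }


def build_crew(settings: Mapping[str, Optional[str]]):
    """Assemble a one-agent crew; requires `pip install crewai`."""
    # Imported lazily so llm_settings() works without CrewAI installed.
    from crewai import LLM, Agent, Crew, Task

    llm = LLM(model=settings["model"], base_url=settings["base_url"])
    analyst = Agent(
        role="Research analyst",
        goal="Summarize findings clearly",
        backstory="A careful analyst who sticks to the provided material.",
        llm=llm,
    )
    task = Task(
        description="Summarize the key points of the provided notes.",
        expected_output="A short bullet list.",
        agent=analyst,
    )
    return Crew(agents=[analyst], tasks=[task])
```

With this shape, `build_crew(llm_settings()).kickoff()` would run the crew, and moving from a local model to a hosted one is a matter of setting `AGENT_MODEL` rather than editing agent code.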

Compared to frameworks like AutoGen and Swarm, which lean heavily on OpenAI, CrewAI’s open-source nature means you’re not stuck paying one provider’s premium or stuck with legacy code locking you into last season’s (now obsolete) AI model. I’m picturing CrewAI as a Swiss Army knife for Agentic AI – it’s versatile and ready for anything.

On-Premises & Hybrid Cloud AI Model Routers

Now, let’s talk about another game-changer: on-premises model routers. Enter LiteLLM, which takes the OpenRouter concept and brings it on premises, enabling hybrid AI scenarios.

If the AI model market is a race with providers constantly overtaking each other, LiteLLM is your pit crew, letting you swap engines mid-race without crashing. It supports over 100 models, from OpenAI’s GPT to Anthropic’s Claude to open-source options like Llama. It also covers local and on-premises deployments, served through vLLM or NVIDIA Triton Inference Server with models downloaded from Hugging Face, alongside hosted models from Amazon Bedrock, OpenAI, or Google Cloud. This simplifies switching models or providers, ensuring adaptability and cost efficiency.
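
Here is a minimal sketch of that engine swap, assuming the `litellm` Python package and its `completion()` function, which takes an OpenAI-style `messages` list and a provider-prefixed model string. The backend names, model IDs, and the `vllm.internal` host are placeholders, not a recommended setup.

```python
from typing import Any, Dict, List

# Hypothetical backends: names, model IDs, and the internal host are placeholders.
BACKENDS: Dict[str, Dict[str, Any]] = {
    "cloud-gpt": {"model": "openai/gpt-4o"},
    "cloud-claude": {"model": "anthropic/claude-3-5-sonnet-20240620"},
    "onprem-llama": {
        # Assumes a vLLM server exposing an OpenAI-compatible API on premises.
        "model": "hosted_vllm/meta-llama/Meta-Llama-3-8B-Instruct",
        "api_base": "http://vllm.internal:8000/v1",
    },
}


def completion_kwargs(backend: str, prompt: str) -> Dict[str, Any]:
    """Return the keyword arguments for one chat call against a named backend."""
    messages: List[Dict[str, str]] = [{"role": "user", "content": prompt}]
    return {**BACKENDS[backend], "messages": messages}


def ask(backend: str, prompt: str) -> str:
    """Send the prompt through LiteLLM; requires `pip install litellm`."""
    import litellm  # lazy import so the helpers above need no extra packages

    response = litellm.completion(**completion_kwargs(backend, prompt))
    return response.choices[0].message.content
```

The calling code only ever names a backend, so `ask("onprem-llama", ...)` and `ask("cloud-claude", ...)` differ by one argument. Provider credentials are expected to come from the environment.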

OpenRouter’s data backs this up. Its token usage shows developers spreading tasks across models, not sticking to one, with explosive growth reflecting the need for such flexibility. LiteLLM’s strength lies in its ability to handle diverse setups, including routing to on-premises models that incur no ongoing cloud costs and are better suited for sensitive data.

Dealing with Sensitive Data

Let’s break down the practical benefits for businesses, focusing on scenarios where data can’t (or shouldn’t) live in the cloud.

Many industries deal with on-premises datasets due to privacy, regulations, or costs – think healthcare records in hospitals, proprietary research data in university labs, or insurance processing records. For one reason or another, these can’t be uploaded to hyperscalers without risking breaches or compliance issues.

Combining CrewAI and LiteLLM empowers businesses to build independent AI systems that respect these constraints.

Start with a university research lab studying rare genetic disorders. They have terabytes of genomic data stored on secure, on-premises servers. Here, privacy regulations like GDPR limit cloud migration options, and institutional or government policies keep it local.

With CrewAI, they set up AI agents to analyze patterns, predict protein interactions, or draft research summaries. One agent might sift through sequences for anomalies, while another cross-references literature. LiteLLM routes these tasks to an on-premises model, like a fine-tuned Llama 3 running on the lab’s GPU cluster. That means no cloud API calls and no recurring usage fees. If a new open-source model on Hugging Face outperforms it next month, LiteLLM’s support for over 100 models lets them switch seamlessly. This keeps data secure, avoids hyperscaler lock-in, and aligns with research needs where data sovereignty is key. Local setups like this preserve the benefits of AI while addressing privacy.

Now, consider an insurance company processing claims. Their proprietary datasets – decades of claims history, actuarial tables, and customer profiles – are stored on-premises for security and to dodge cloud storage fees.

CrewAI lets them create agents to automate claim validation, flag fraud, or predict risks, with one agent reviewing documents and another assessing probabilities. LiteLLM directs these tasks to models in their data center: high-reasoning fraud detection goes to a custom-trained Mistral model, while simpler tasks like formatting go to a lightweight open-source alternative.
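
The routing rule in this scenario can be sketched as a small lookup table. This is plain Python with no LiteLLM dependency, and the task kinds and model aliases are invented for illustration. Sending unmapped tasks to the heavier model is also just an assumed default.

```python
from enum import Enum


class TaskKind(Enum):
    FRAUD_DETECTION = "fraud_detection"
    FORMATTING = "formatting"
    CLAIM_VALIDATION = "claim_validation"


# Hypothetical aliases for two on-premises models.
HEAVY_MODEL = "onprem-mistral-custom"
LIGHT_MODEL = "onprem-small-oss"

ROUTES = {
    TaskKind.FRAUD_DETECTION: HEAVY_MODEL,  # high-reasoning work
    TaskKind.FORMATTING: LIGHT_MODEL,       # simple, cheap work
}


def pick_model(kind: TaskKind) -> str:
    """Return the model alias for a task; unmapped kinds use the heavy model."""
    return ROUTES.get(kind, HEAVY_MODEL)
```

Keeping the table separate from the agents means the mapping can change, for example when a better lightweight model appears, without touching the claim-handling logic.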

This eliminates cloud costs and ensures compliance. Research on healthcare claims data highlights similar challenges in utilization analysis, where on-premises AI can generate real-world evidence without external dependencies. LiteLLM’s features, such as spend tracking, help monitor budgets in real-time, with users reporting 30–50% cost savings by optimizing local routing.

Finally, in healthcare, hospitals store patient records, imaging data, and clinical notes on-premises to comply with HIPAA, since uploading to a cloud risks fines and breaches. A hospital could use CrewAI for agents that triage queries, analyze anonymized trends, or assist diagnostics: one agent reviews lab results, another generates doctor summaries. LiteLLM routes to an on-premises model like BioMedGPT, keeping data behind the firewall with zero cloud fees. If a better medical-reasoning model emerges, switching is effortless.

Cisco and Red Hat Solutions

To bring these concepts to life in enterprise environments, Cisco and Red Hat collaborate on validated full-stack architectures that streamline AI/ML operations, providing performant and predictable deployments without tying you to a single hyperscaler.

Red Hat OpenShift AI serves as a hyperscaler-independent platform for managing the lifecycle of predictive and generative AI models at scale across hybrid clouds. You can prototype AI workflows in the cloud for rapid iteration, then deploy consistently on-premises, ensuring seamless transitions and data sovereignty for sensitive setups like those in healthcare or research labs.

Red Hat OpenShift AI also includes notable features like the LLM Compressor, which compresses large language models through techniques such as quantization and sparsity. This reduces their memory footprint and enables cheaper inference with fewer hardware resources, which is particularly useful for on-premises deployments where resource efficiency matters.
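
To see why quantization lowers hardware requirements, a back-of-the-envelope estimate helps. The sketch below counts weight memory only (parameters times bits per weight) and ignores the KV cache, activations, and runtime overhead, so real footprints are larger. The 8-billion-parameter size is just an example, and this is generic arithmetic rather than anything specific to the LLM Compressor.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 8e9  # example: an 8-billion-parameter model

fp16_gb = weight_memory_gb(params, 16)  # 16-bit weights
int8_gb = weight_memory_gb(params, 8)   # 8-bit quantized weights
int4_gb = weight_memory_gb(params, 4)   # 4-bit quantized weights
```

For this example the weights take roughly 16 GB at 16 bits, 8 GB at 8 bits, and 4 GB at 4 bits, which can be the difference between needing a data-center GPU and fitting on a much smaller card.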

Cisco validates these architectures on both Cisco UCS hardware and Cisco Cloud Managed AI Clusters (Hyperfabric AI), offering a turnkey way to build AI platforms and integrate tools like CrewAI and LiteLLM while avoiding the hyperscaler lock-in trap.

My point of view: don’t get left behind in this shifting market. These options are definitely worth exploring today. Your AI stack will thank you.