As someone who’s been knee-deep in AI at Cisco, I’ve seen trends come and go, and today I want to talk about one that’s starting to feel a bit dated: Retrieval-Augmented Generation, or RAG. Don’t get me wrong: RAG has been a game-changer for making LLMs more accurate by pulling in external knowledge and combining it with what the LLM learned during training. But as we push the boundaries of what AI can do, I’m excited about what comes next: the Model Context Protocol, or MCP. It’s not hype; it’s the next logical step in building smarter, more scalable systems. Let me explain why I think RAG might be yesterday’s news, and how MCP could help us tackle real-world challenges more effectively.

First, a quick refresher on RAG for context. Imagine you’re in a massive library and you ask the librarian (that’s the LLM) a question. The librarian is extremely well informed, but their knowledge is limited to what they have already learned. If you ask about something more recent that they don’t know, they might hallucinate (i.e., make a confident guess). This is where RAG comes in.

Retrieval: If the librarian doesn’t know the answer, they search a filing cabinet of newer books that haven’t made it onto the library shelves yet and grab the excerpts most relevant to your question.

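Augmentation and generation: The librarian combines those excerpts with what they already know and weaves them into their answer. In LLM terms, the retrieved text is added to the prompt (augmentation), and the model then produces its response from that enriched prompt (generation).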

RAG is an efficient way to inject fresh information into a model that might otherwise hallucinate or fall back on outdated training data to answer your question.
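To make the two steps concrete, here is a minimal Python sketch of the retrieval and augmentation halves of RAG. It is a toy under stated assumptions: a keyword-overlap retriever stands in for the vector search a production system would use, and the documents, `retrieve`, and `augment` are hypothetical names for illustration. The generation step (actually sending the prompt to an LLM) is left out.

```python
import re

# Hypothetical "filing cabinet": newer documents the model never saw in training.
DOCUMENTS = {
    "release-notes": "Version 2.4 adds SSO support and fixes the login timeout bug.",
    "schedule": "The next maintenance window is Saturday at 02:00 UTC.",
    "policy": "Password resets require manager approval for admin accounts.",
}


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Retrieval: return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(DOCUMENTS.values(), key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def augment(question: str, excerpts: list[str]) -> str:
    """Augmentation: place the retrieved excerpts in the prompt ahead of the question."""
    context = "\n".join(f"- {e}" for e in excerpts)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


question = "When is the next maintenance window?"
prompt = augment(question, retrieve(question))
print(prompt)  # this prompt is what would be sent to the LLM for generation
```

The model never has to "know" the maintenance window; it only has to read it from the prompt, which is exactly why RAG reduces hallucination on fresh facts.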

But here’s the rub: as queries get more complex, that library search can become a bottleneck. Imagine you ask a follow-up question, and the answer the LLM needs wasn’t in the books it pulled just a moment ago.

A basic RAG system might struggle with that follow-up if the “books” it pulled for the first question didn’t contain the information now being asked for (say, a team’s future schedule). The system has to start the search over, and if the follow-up relies heavily on context from earlier turns in the conversation, a simple RAG approach may not connect those dots efficiently.
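The follow-up problem is easy to reproduce with the same kind of toy retriever. In this sketch (the teams, documents, and `scores` helper are all hypothetical), the follow-up “When is their next game?” carries no clue about which team “their” means, so retrieving on it alone scores both teams’ schedules equally. Folding the earlier turn into the retrieval query is one simple mitigation; many real systems instead have an LLM rewrite the follow-up into a standalone question before searching.

```python
import re

# Hypothetical knowledge base: two "books" about the Falcons and one about a rival team.
DOCUMENTS = {
    "falcons-result": "Falcons won last night 3-1.",
    "falcons-schedule": "Falcons next game: Friday.",
    "rovers-schedule": "Rovers next game: Sunday.",
}


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def scores(query: str) -> dict[str, int]:
    """Word-overlap score of every document against the query (toy retriever)."""
    q = tokenize(query)
    return {name: len(q & tokenize(doc)) for name, doc in DOCUMENTS.items()}


history = ["How did the Falcons do last night?"]
follow_up = "When is their next game?"

# Naive RAG: retrieve on the follow-up alone; "their" gives the retriever nothing to go on.
print(scores(follow_up))
# {'falcons-result': 0, 'falcons-schedule': 2, 'rovers-schedule': 2}

# History-aware variant: the entity from turn one ("Falcons") now steers turn two.
print(scores(" ".join(history + [follow_up])))
# {'falcons-result': 3, 'falcons-schedule': 3, 'rovers-schedule': 2}
```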

Enter MCP, which flips the script with a more organized approach. Working with MCP is more akin to a traditional three-tier application: user (browser), middleware (MCP server), database (LLM). Think of it as swapping the chaotic library for a well-maintained filing cabinet right in your office. Each drawer represents a curated context: pre-processed, indexed knowledge that’s readily accessible without constant retrieval runs. This isn’t about replacing the LLM; it’s about enhancing it with persistent, multi-layered contexts that stick around across interactions. At Cisco, we’re working with Anthropic on several MCP implementations.

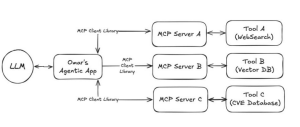

What really sets MCP apart is its integration with tools via dedicated servers. These MCP servers act as the brain’s executive function, not just fetching data but actively using tools to reason and respond. For example, imagine an MCP server tapping into a SQL database for a quick lookup of customer metrics, or searching a PDF knowledge library to extract policy details. The MCP server exposes tools to the client and then handles the orchestration, deciding when to query the LLM, when to invoke a tool, and how to blend the results. This modularity means we’re not locked into a single retrieval method; we can mix and match based on the task.
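For readers who want to see what exposing tools looks like on the wire, here is a minimal sketch of the server side. MCP messages are JSON-RPC 2.0, and the specification defines `tools/list` (advertise tools, each with a JSON Schema for its inputs) and `tools/call` (run one and return content). Everything else is an assumption for illustration: the `customers` table, the `POLICIES` dict standing in for a PDF library, and the tool names `lookup_customer` and `search_policies`. A real server would normally use one of the official MCP SDKs, which also handle the `initialize` handshake and the transport (stdio or HTTP) that this sketch leaves out.

```python
import json
import sqlite3

# Hypothetical backing systems: an in-memory SQL table and a tiny policy "library"
# (a real server might run a PDF search here instead of a dict lookup).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (name TEXT, open_tickets INTEGER)")
db.executemany("INSERT INTO customers VALUES (?, ?)", [("Acme", 3), ("Globex", 0)])
POLICIES = {"refunds": "Refunds over $500 need director approval."}


def lookup_customer(name: str) -> str:
    """Tool: parameterized SQL lookup of one customer's metrics."""
    row = db.execute("SELECT open_tickets FROM customers WHERE name = ?", (name,)).fetchone()
    return f"{name} has {row[0]} open tickets" if row else f"No customer named {name}"


def search_policies(topic: str) -> str:
    """Tool: look up a policy by topic."""
    return POLICIES.get(topic.lower(), "No matching policy found")


# Tool registry: name -> (function, description, name of its single string argument).
TOOLS = {
    "lookup_customer": (lookup_customer, "Look up customer metrics in SQL", "name"),
    "search_policies": (search_policies, "Search the policy library", "topic"),
}


def handle(request_json: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP methods tools/list and tools/call."""
    req = json.loads(request_json)
    if req["method"] == "tools/list":
        result = {"tools": [
            {
                "name": name,
                "description": desc,
                "inputSchema": {
                    "type": "object",
                    "properties": {arg: {"type": "string"}},
                    "required": [arg],
                },
            }
            for name, (_, desc, arg) in TOOLS.items()
        ]}
    elif req["method"] == "tools/call":
        # No validation of tool names or arguments: this is a sketch.
        func, _, arg = TOOLS[req["params"]["name"]]
        text = func(req["params"]["arguments"][arg])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}  # standard JSON-RPC code
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


call = {
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "lookup_customer", "arguments": {"name": "Acme"}},
}
print(handle(json.dumps(call)))
```

Because each tool is just an entry in the registry, adding a Splunk query or a vector search later means registering one more function, which is the mix-and-match modularity described above.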

Looking ahead, I believe the future isn’t just chatting with a RAG-powered bot that does a simple lookup. It’s about agentic systems: autonomous setups where the MCP server takes the reins, interfacing with LLMs and tools dynamically. This setup scales “test-time compute,” meaning we can spend more processing power on reasoning during inference without making the model itself bigger. It also increases tool use inside the reasoning loop, allowing for iterative problem-solving.

Picture an AI agent at your company troubleshooting an issue: the Reasoning Agent (that’s the Agentic App in the picture below) uses the MCP server to pull historical logs via SQL or Splunk, cross-references them with a PDF search of internal policies and procedures, consults the LLM for insights, and loops back if needed, all without human intervention. It’s practical scaling that addresses real enterprise needs, like handling massive data volumes on-site without having to move the data.
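The troubleshooting loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular agent framework works: `fake_llm` is a hard-coded, hypothetical stand-in for a real model call, and the `query_logs` and `search_policies` tools are hypothetical lambdas where a real agent would go through an MCP server to SQL, Splunk, or a PDF search. The `max_steps` parameter is the test-time compute knob: each extra step buys one more round of reasoning and, possibly, one more tool call.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str       # "tool" or "answer"
    tool: str = ""  # tool name when kind == "tool"
    arg: str = ""   # tool argument when kind == "tool"
    text: str = ""  # final answer when kind == "answer"


def fake_llm(goal: str, observations: list[str]) -> Action:
    """Hypothetical stand-in for a model call: a fixed policy so the loop runs offline."""
    if not observations:
        return Action("tool", tool="query_logs", arg="service=auth")
    if len(observations) == 1:
        return Action("tool", tool="search_policies", arg="restart")
    return Action("answer", text=f"Diagnosis for '{goal}' based on: " + "; ".join(observations))


# Hypothetical tools; in the scenario above these calls would go through an MCP server.
TOOLS = {
    "query_logs": lambda q: f"logs({q}): 42 timeouts since 09:00",
    "search_policies": lambda q: f"policy({q}): restarts need a change ticket",
}


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Reason-act loop: each step spends one model call and at most one tool call."""
    observations: list[str] = []
    for _ in range(max_steps):
        action = fake_llm(goal, observations)
        if action.kind == "answer":
            return action.text
        observations.append(TOOLS[action.tool](action.arg))  # loop back with new evidence
    return "Step budget exhausted; escalating to a human."


print(run_agent("auth service outage"))
print(run_agent("auth service outage", max_steps=2))
```

With the default budget the agent pulls logs, checks policy, and answers on its third step; with `max_steps=2` it runs out of budget before answering and hands off. A hard cap on steps is one simple way to keep an autonomous loop from running indefinitely.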

Of course, this isn’t without challenges. Implementing MCP requires thoughtful design to avoid context overload or tool integration hiccups. At Cisco, we’re committed to leading in AI innovation and ensuring our solutions are reliable and ethical. We’re investing in agentic frameworks, including A2A and AGNTCY, to help create the next generation of agentic protocols, making AI feel less like a black box and more like a trusted colleague.
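Context overload is easiest to picture with a concrete budget. Below is a minimal sketch of one simple mitigation, recency-based truncation: keep the system prompt and as many of the most recent turns as fit. The word-count budget is an assumption standing in for a real tokenizer, and `fit_context` is a hypothetical helper. Summarizing or re-retrieving older turns are common alternatives when simply dropping them would lose something important.

```python
def fit_context(system: str, turns: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the newest turns that fit within `budget` words.

    Word count is a crude stand-in for a real tokenizer.
    """
    used = len(system.split())
    kept: list[str] = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = len(turn.split())
        if used + cost > budget:
            break  # everything older than this turn is dropped
        kept.append(turn)
        used += cost
    return [system] + list(reversed(kept))  # restore chronological order


turns = [
    "user: the auth service is down",
    "tool: 42 timeouts since 09:00",
    "tool: restarts need a change ticket",
    "user: what should I do first?",
]
print(fit_context("You are a troubleshooting agent.", turns, budget=20))
# keeps the system prompt and the last two turns
```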

In the end, MCP isn’t about ditching RAG entirely; it’s an evolution. It’s a humble reminder that AI is a journey, and we’re all figuring it out together. What do you think? Have you experimented with similar concepts? Drop a comment below. I’d love to hear your take.

For more technical detail, read this blog post by @Omar Santos: https://community.cisco.com/t5/security-blogs/ai-model-context-protocol-mcp-and-security/ba-p/5274394

Looking further ahead, I expect agents built on MCP and LLMs to run in a distributed world, just as the internet does today. In that world, we’ll need not just MCP but new agent protocols to handle identification and discovery, verification and trust, observability, and interoperability. Cisco is actively contributing to AGNTCY.ORG and A2A to further the development of Internet of Agents protocols.