Today’s guest post comes from Dr. Joshua Hursey, an Assistant Professor in the Computer Science Department at the University of Wisconsin, La Crosse.

For a number of years, developers tuning High Performance Computing (HPC) applications and libraries have been harnessing server topology information to significantly optimize performance on servers with increasingly complex memory hierarchies and increasing core counts. Tools such as the Portable Hardware Locality (hwloc) project provide these applications with abstract, yet detailed, information about the single-server topology. But that is where the topology analysis often stops, neglecting one of the largest components of the HPC environment: the network.

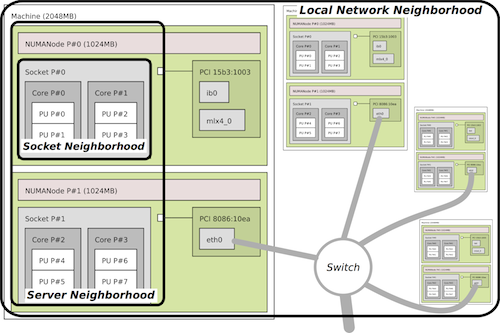

Today, I am excited to announce the launch of the Network Locality (netloc) Project. The netloc project provides applications with the ability to discover the network topology of their HPC cluster, and access an abstract representation of that topology (as a graph). Additionally, by combining the hwloc single-server topology data with the network topology data, netloc provides developers with a comprehensive view of the HPC system topology, spanning from the processor cores in one server all the way to the cores in another – including the complex network(s) in between.

This first public release of the netloc project includes support for OpenFlow-managed Ethernet and InfiniBand networks. The modular architecture of the netloc project makes it easy to add support for other network types and discovery techniques by adding a reader plugin/tool for that network. We are actively working on support for other network types, and are looking for contributors eager to help.

Applications interact with the network topologies through the netloc C API, which is logically segmented into four parts:

- The data collection API is used by the reader tools to store network topology information in a network independent format.

- The metadata discovery API is used to access information about the network types and subnets available on the system.

- The topology query API is used to access specific information about nodes and edges in a single network subnet graph.

- The map API is used to move between server topology and network topology data structures, and perform some basic queries across these domains (e.g., what are the data paths from core 1 on server A to core 2 on server B if there are multiple NICs available?).
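
To make the kind of cross-domain question in the last bullet concrete, here is a minimal Python sketch of enumerating the data paths between two servers when one of them has multiple NICs. It is not the netloc C API: the `server_nics` and `links` tables, the node names, and the `simple_paths` and `data_paths` helpers are all hypothetical and exist only for illustration. The sketch also stops at the NIC level and leaves out the core-to-NIC step that the hwloc data would supply.

```python
from itertools import product

# Hypothetical toy data, NOT the netloc API or its storage format.
# Server side (the kind of data hwloc describes): NICs in each server.
server_nics = {
    "A": ["A-nic0", "A-nic1"],
    "B": ["B-nic0"],
}

# Network side (the kind of data netloc describes): an undirected graph
# of NIC ports and switches, stored as an adjacency mapping.
links = {
    "A-nic0": ["sw0"],
    "A-nic1": ["sw1"],
    "B-nic0": ["sw1"],
    "sw0": ["A-nic0", "sw1"],
    "sw1": ["A-nic1", "B-nic0", "sw0"],
}


def simple_paths(graph, src, dst, path=None):
    """Yield every loop-free path from src to dst (depth-first search)."""
    path = (path or []) + [src]
    if src == dst:
        yield path
        return
    for nxt in graph[src]:
        if nxt not in path:  # never revisit a node on the current path
            yield from simple_paths(graph, nxt, dst, path)


def data_paths(server_a, server_b):
    """Collect loop-free paths between every NIC pair of the two servers."""
    found = []
    for src, dst in product(server_nics[server_a], server_nics[server_b]):
        found.extend(simple_paths(links, src, dst))
    return found


paths = data_paths("A", "B")
for p in paths:
    print(" -> ".join(p))
```

In this toy topology each of server A's two NICs gives one distinct route to server B, which is the sort of choice a locality-aware application would want to know about.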

Netloc provides information not only about the physical nodes and edges in the network topology graph, but also information about the logical paths (when available), which are important when there are multiple physical paths between peers. Additionally, netloc computes the shortest path through the network to support some of its basic query interfaces. Because the type of analysis (e.g., graph partitioning) is often application-specific, netloc limits the amount of analysis it performs and leaves further analysis to higher level applications and libraries built upon this service.
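
As an illustration of a shortest-path query over a topology graph, the sketch below runs a breadth-first search that minimizes hop count. This is an assumption made for the example: the post does not say which algorithm or cost metric netloc uses, and the `shortest_path` function and the `topology` table here are hypothetical, not part of netloc.

```python
from collections import deque


def shortest_path(graph, src, dst):
    """Return one minimum-hop path from src to dst, or None if unreachable."""
    previous = {src: None}  # doubles as the visited set
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the predecessor links back to the source.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in graph.get(node, ()):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical four-switch topology with two routes between the hosts.
topology = {
    "hostA": ["sw0"],
    "hostB": ["sw3"],
    "sw0": ["hostA", "sw1", "sw3"],
    "sw1": ["sw0", "sw2"],
    "sw2": ["sw1", "sw3"],
    "sw3": ["sw0", "sw2", "hostB"],
}

route = shortest_path(topology, "hostA", "hostB")
print(route)
```

A real network would likely weight edges by bandwidth or latency, in which case a weighted algorithm such as Dijkstra's would replace the breadth-first search.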

Today’s launch of netloc is the culmination of many months of conversation, design, and discovery. But netloc is still in its early days, and there is much work to be done. We are interested in feedback on the project, and in developers willing to help transform this project into a truly valuable asset for the HPC community.

The netloc project is an open-source project released under the Open MPI project umbrella. For more information about the project, including access to the v0.5 release, mailing lists, and source code, follow the link below:

http://www.open-mpi.org/projects/netloc/

If you are at SC’13 this year, then be sure to stop by the Cisco booth for more information about this project. And check out the following presentations about netloc:

- Open MPI “State of the Union” BOF: Tuesday, 12:15-1:15pm in Rooms 301-302-303

- netloc: Towards a Comprehensive View of the HPC System Topology: Wednesday, 2pm Inria booth (#2116)

Unfortunately, I will not be attending SC’13 this year, but my two collaborators, Jeff Squyres (Cisco) and Brice Goglin (Inria), will be there. Be sure to find them for more information.

does netloc support ethernet network?

Yes; as it states in the article:

“This first public release of the netloc project includes support for OpenFlow-managed Ethernet and InfiniBand networks…”